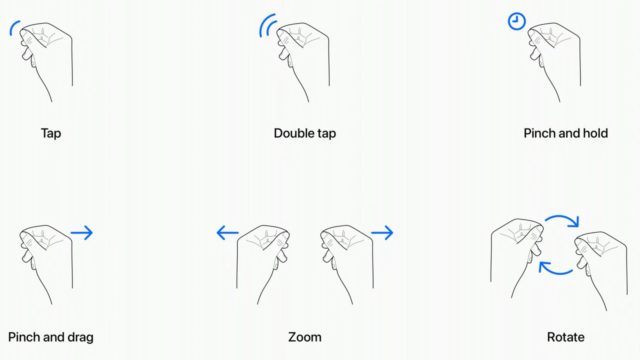

The Apple Vision Pro headset operates without a physical controller. Instead, it uses eye tracking and hand gestures to let users interact with objects in the virtual environment. During one of the developer sessions, Apple's designers described the specific gestures the Vision Pro supports and explained the mechanics of some interactions.

Tap

To interact with the virtual items you are looking at, you perform a tap by bringing your thumb and index finger together. This gesture, also known as pinching, signals to the system that you want to interact with a specific item, much like tapping on an iPhone screen.

Double tap

Using the same gesture, pinching your thumb and index finger together twice in quick succession performs a double tap.

Pinch and hold

The pinch-and-hold gesture is similar to a long press on the iPhone. It is used for tasks such as selecting and highlighting text, and windows can be resized by dragging their corners.

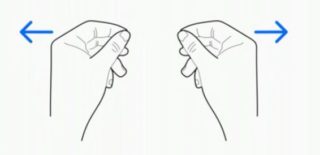

Pinch and drag

Pinch and drag serves several purposes, such as scrolling and moving windows. You can scroll horizontally or vertically, and moving your hand faster scrolls faster.

Zoom

Zoom is one of the two main two-handed gestures. To zoom in, pinch your fingers together on both hands and then slowly move your hands apart. To zoom out, make the same pinch but bring your hands closer together.
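
As a rough illustration of how a two-handed zoom could be turned into a scale factor, the sketch below divides the current distance between the two pinching hands by their distance when the gesture began. This is not Apple's implementation; the function name `zoom_scale` and the use of plain `(x, y, z)` tuples in metres are assumptions made for the example.

```python
import math

def zoom_scale(start_left, start_right, now_left, now_right):
    """Return a scale factor for a two-handed pinch zoom (hypothetical helper).

    Each argument is the (x, y, z) position of a pinching hand, in metres.
    A result above 1.0 means the hands moved apart (zoom in); a result
    below 1.0 means they moved closer together (zoom out).
    """
    start = math.dist(start_left, start_right)
    now = math.dist(now_left, now_right)
    if start == 0:
        raise ValueError("hands must start at different positions")
    return now / start

# Hands start 0.2 m apart and end 0.4 m apart: the content is enlarged.
print(zoom_scale((-0.1, 0, 0), (0.1, 0, 0), (-0.2, 0, 0), (0.2, 0, 0)))
```

Using a ratio rather than an absolute distance means the result does not depend on how far apart the hands happened to be when the pinch started.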

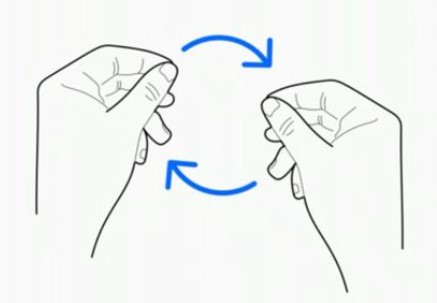

Rotate

Rotate is the other two-handed gesture. According to Apple's description, you pinch the fingers of both hands together and then rotate your hands to turn a virtual object as you like.

Gestures and eye movements work together, with the help of the many cameras built into the headset. These cameras precisely track where you are looking, so eye position determines what your hand gestures act on. For example, when you look at an app icon or another item on the screen, it is targeted and highlighted, and you can then interact with it through a gesture.
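
The look-then-pinch model can be sketched as a small hit test: the item under the gaze point is highlighted, and it is activated only if a pinch arrives while it is targeted. This is a simplified 2D illustration, not how the headset is actually programmed; the `Item` class, `gaze_target`, and `handle_frame` are hypothetical names invented for the example.

```python
from dataclasses import dataclass

@dataclass
class Item:
    """A rectangular on-screen item (hypothetical, 2D for simplicity)."""
    name: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def contains(self, gx, gy):
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)

def gaze_target(items, gaze):
    """Return the first item under the gaze point, or None."""
    gx, gy = gaze
    for item in items:
        if item.contains(gx, gy):
            return item
    return None

def handle_frame(items, gaze, pinched):
    """Return (highlighted_name, activated_name) for one frame of input."""
    target = gaze_target(items, gaze)
    highlighted = target.name if target else None
    # A pinch only does something when the eyes are on a target.
    activated = highlighted if pinched else None
    return highlighted, activated

icons = [Item("Safari", 0, 0, 100, 100), Item("Photos", 120, 0, 100, 100)]
print(handle_frame(icons, (150, 40), pinched=False))  # looking only
print(handle_frame(icons, (150, 40), pinched=True))   # looking and pinching
```

Keeping targeting (eyes) separate from activation (hand) is what lets the pinch itself stay small: the hand never has to point at anything.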

Hand gestures do not need to be large; you can leave your hands in a natural resting position and still control everything. Apple encourages this because it prevents the fatigue of holding your hands in the air. A small pinch is enough for tasks that would normally require a tap, as the cameras can track minute movements.

Because targeting follows your gaze, you can select and manipulate objects both near and far. Apple also acknowledges that larger, direct gestures are sometimes preferable for controlling objects right in front of you, where you can reach out with your arm and fingers. For example, if you have a Safari window directly in front of you, instead of pinching with your hand in your lap, you can reach out and swipe it right there.

In addition to gestures, the Apple Vision Pro can recognize hand movements such as air typing. However, those who tried the demo have not yet had a chance to test this feature. Gestures also work in combination: to create a drawing, for example, you look at a specific spot on the canvas, choose a brush with your hand, and then make an in-air gesture to start drawing. If you shift your gaze elsewhere, the cursor moves instantly to the new location.

Although Apple has defined six basic system gestures, developers are free to design custom gestures for their apps to trigger other actions. Developers must ensure that these custom gestures are easy to distinguish from the system gestures and from common hand motions, and that they can be performed repeatedly without causing hand fatigue.

In addition to hand and eye input, the Apple Vision Pro can connect to Bluetooth keyboards, trackpads, mice, and game controllers. Voice search and dictation are also available as options.

Many people who have tested the Apple Vision Pro consistently describe the control system as intuitive, with no need for extensive training. Apple's designers appear to have modeled it on the multi-touch gestures found on iPhones and iPads, and feedback so far has been positive.
